We'll be using some traditional data science techniques today to analyze the American Association of Individual Investors (AAII) Survey. This is a survey that comes out every Thursday and presents individual investors' expectations of market direction over the next six months or so. It is split into Bullish, Neutral, and Bearish readings expressed as percentages. There is also a Bull-Bear spread, the Bullish reading minus the Bearish reading. Institutional investors use the survey as a contrarian indicator since it represents the views of retail investors, aka dumb money. Hence, extreme bull or bear readings might indicate market tops or bottoms that can be used to time sells or buys, respectively.

Despite the modestly pejorative undertone, there is some logic to this. Institutional investors have an information edge that retail investors don't have: they meet with management teams, speak to other investors, and spend tons of money on data gathering and synthesis. Hence, the institutions should be first movers. By the time the information has passed through the sieve of news media, pundits, and talking heads such that retail investors are acting on it, the last incremental buy or sell has happened. Time to move on.

Such logic fails to account for the fact that institutional investors are often retail investors in their personal accounts, and many sophisticated investors (read: high net worth) invest money through traditional retail brokerages. There are, of course, institutional surveys of similar ilk. But these are either not broadly disseminated or not available for public consumption. Finally, figuring out whether the respondents are talking their own book or the opposite to deceive other investors is even more complicated than figuring out Keynes' Beauty Contest.

Whatever the case, as the survey is widely reported in the financial press and gets plenty of air time from investment strategy wonks, it might have some predictive value. Let's find out.

The AAII kindly allows us to download the data, so all of the sentiment data in the following graphs comes from the AAII. Looking at the data, we noticed that the dates and the S&P prices don't align, so we're using the yfinance package to bring in prices and then aligning the survey results with those prices. As mentioned above, the survey is released on Thursday before the open, so if it is a market mover, we should expect to see some response either vs. the prior close or vs. the open. We might also see some drift from that initial response to the close one week hence, before the next survey is released. Now let's get into the data.
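
The alignment step can be sketched roughly as follows. This is a minimal illustration on synthetic data, not the code used for the graphs: the `prices` and `survey` frames and their values are made up, and the assumption is simply that the weekly readings are left-joined onto the daily price calendar so that survey values appear only on release days.

```python
import numpy as np
import pandas as pd

# Synthetic daily prices on weekdays (a stand-in for SPY data from yfinance)
days = pd.bdate_range("2024-01-01", "2024-01-31")
rng = np.random.default_rng(0)
prices = pd.DataFrame(
    {
        "open": 100 + rng.normal(0, 1, len(days)),
        "close": 100 + rng.normal(0, 1, len(days)),
    },
    index=days,
)

# Synthetic weekly survey readings dated on Thursdays (made-up values)
thursdays = days[days.dayofweek == 3]
survey = pd.DataFrame(
    {"bullish": np.linspace(0.30, 0.45, len(thursdays))},
    index=thursdays,
)

# Left-join onto the price calendar: readings land only on release days,
# every other trading day gets NaN in the survey column
aligned = prices.join(survey, how="left")

# Rows where a survey reading is available
event_days = aligned.dropna(subset=["bullish"])
print(event_days)
```

Keeping the full daily calendar, rather than only the Thursday rows, makes it possible to look up the prior close and the close before the next release later on.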

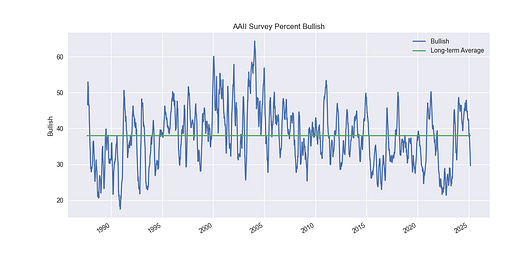

First, we show the 8-week moving average of the Bullish percent along with the long-term average, which is roughly 38%.

And here's a similar graph for the Bull-Bear Spread. The average here is about 7%.
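
For readers who want to reproduce the smoothing, here is a small sketch of an 8-week moving average compared with a long-term average. The series are synthetic and the names (`bullish`, `bearish`, `spread`, `gap`) are illustrative assumptions rather than the columns of the AAII file.

```python
import numpy as np
import pandas as pd

# Two years of made-up weekly readings dated on Thursdays
rng = np.random.default_rng(1)
weeks = pd.date_range("2020-01-02", periods=104, freq="W-THU")
bullish = pd.Series(0.38 + rng.normal(0, 0.08, len(weeks)), index=weeks)
bearish = pd.Series(0.31 + rng.normal(0, 0.08, len(weeks)), index=weeks)

# Bull-Bear spread: bullish reading minus bearish reading
spread = bullish - bearish

# 8-week moving average; the first 7 weeks have no full window and are NaN
smoothed = bullish.rolling(8).mean()

# Long-term average over the whole sample, used as the reference line
long_run = bullish.mean()

# Distance of the smoothed reading from the long-term average, in percentage points
gap = (smoothed - long_run) * 100
print(gap.dropna().tail())
```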

Now, let's see what impact the survey has on returns measured from the prior day's close and from the open, using SPY, the longest-running ETF that tracks the S&P 500. We randomly select 3,000 data points for ease of viewing and then plot the returns on a close-to-close and open-to-close basis vs. the Bullish reading below.

As one can see, there's a fair amount of scattering above and below the average return, which happens to be around 0%. If the survey worked as a contrarian indicator, one would expect lower returns for readings above the long-term average, but that doesn't appear to be the case. There are some significant jumps at the low end, but there are large drops too. In all, this appears to show the survey has limited predictive value. What about comparing the open-to-close and the next week return? We do so in the graph below.

The graphs exhibit similar noise, and, as such, we see very little to suggest the AAII Survey has any predictive value. We'll save readers their eyesight by not presenting the graphs for other markers like the Bearish percentage or the Bull-Bear Spread. The results are quite similar to what we've already presented.
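
The two return measures behind the scatter plots above can be sketched as follows. The prices are made up, and the use of log returns is taken from the code at the end of the post; the sampling step mirrors the random selection described earlier, with a tiny sample size chosen only for illustration.

```python
import numpy as np
import pandas as pd

# Four made-up trading days of open and close prices
prices = pd.DataFrame(
    {
        "open": [100.0, 101.0, 102.0, 101.5],
        "close": [101.0, 100.5, 103.0, 102.0],
    },
    index=pd.bdate_range("2024-01-03", periods=4),
)

# Close-to-close: today's close vs. the prior day's close (first row has no prior close)
prices["close_to_close"] = np.log(prices["close"] / prices["close"].shift(1))

# Open-to-close: today's close vs. today's open
prices["open_to_close"] = np.log(prices["close"] / prices["open"])

# Random subsample for plotting; a fixed seed keeps the picture reproducible
sample = prices.sample(n=min(3, len(prices)), random_state=42)
print(sample[["close_to_close", "open_to_close"]])
```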

Let's take one final look at whether the returns on the day of the announcement vs. the prior day exhibit any predictive signal for the following week. The logic here is that while we don't have the data to analyze the amount of surprise, if any, between the posted results and expectations, perhaps a large move would yield a continuation or reversal. For this we graph the next week return vs. the return from the prior close, along with the regression equation and a 45-degree line for grounding.

Now this is more interesting. If we look at the regression equation shown on the graph, we see that there is some follow-through in the next week based on the return from the prior day. That follow-through tends to be about half of the initial move plus a modest adder. And it appears statistically significant (p-value < 0.05). The 45-degree line shows what the follow-through would be if it were a 1-to-1 relationship. Of course, it is hard to know if this is actually a tradeable signal without more work. Indeed, that follow-through might simply be an artifact of the generally upward trend in the S&P, a spurious correlation.
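
One check worth running on the artifact question is whether the daily return and the next-week window overlap. In the code at the end of the post, `ret` appears to be computed for every trading day while `post_ret` is back-filled across the week, so some rows may pair a daily return with a window that already contains it. That reading of the code is an assumption on our part. The sketch below uses synthetic, independent returns (no real data; `daily`, `window`, and both slopes are illustrative) to show how such an overlap alone could produce a positive slope, and how restricting the regression to the survey day removes it.

```python
import numpy as np

rng = np.random.default_rng(7)
n_weeks = 5000

# Independent daily log returns: each row is a week, column 0 is the survey day
daily = rng.normal(0.0, 0.01, size=(n_weeks, 5))

# Return from the survey-day close to the close before the next survey:
# the sum of the four daily returns that follow the survey day
window = daily[:, 1:].sum(axis=1)

# (a) Every trading day paired with its week's window return.
# Four of the five daily returns are part of the window they are compared to.
x_all = daily.ravel()
y_all = np.repeat(window, 5)
slope_all = np.polyfit(x_all, y_all, 1)[0]

# (b) Only the survey-day return, which is not part of the window
slope_event = np.polyfit(daily[:, 0], window, 1)[0]

print(f"slope with overlapping days: {slope_all:.2f}")
print(f"slope with survey days only: {slope_event:.2f}")
```

With independent returns there is no true follow-through, so any sizable slope in case (a) comes from the overlap alone. If the real data behave similarly, rerunning the regression on survey days only would be a reasonable first step before treating the follow-through as a signal.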

Whatever the case, we have some interesting takeaways. While we don't see much evidence that the AAII Survey has predictive power, there are some clues that the market reaction might indicate direction in the subsequent week. But this would need to be tested further to prove it so. Clearly, this was not an exhaustive analysis. We could have cut the data into finer pieces to separate the tastiest morsels from the filler. Additionally, one might question if the S&P is the right index to test this. Perhaps a small-cap index is better. Over to you. What do you think we've missed? What should we add? Let us know!
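
As a starting point for cutting the data into finer pieces, one option is to bucket the Bullish reading into quantiles and compare next-week returns across buckets. The sketch below is only an illustration on random data: the column names `bullish` and `next_week`, the choice of five buckets, and the bucket labels are all assumptions.

```python
import numpy as np
import pandas as pd

# Made-up survey readings and next-week returns, unrelated by construction
rng = np.random.default_rng(3)
n = 500
df = pd.DataFrame(
    {
        "bullish": rng.uniform(0.15, 0.65, n),
        "next_week": rng.normal(0.001, 0.02, n),
    }
)

# Split the Bullish reading into five equal-sized buckets (quintiles)
df["bucket"] = pd.qcut(df["bullish"], 5, labels=["Q1", "Q2", "Q3", "Q4", "Q5"])

# Average next-week return, its dispersion, and the sample size per bucket
summary = df.groupby("bucket", observed=True)["next_week"].agg(["mean", "std", "count"])
print(summary)
```

A contrarian signal would show up as lower average returns in the top bucket and higher ones in the bottom bucket, subject to the usual caveats about sample size within each bucket.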

Code below.

# Import packages
import numpy as np
import pandas as pd
import statsmodels.api as sm
import matplotlib.pyplot as plt
import yfinance as yf

# Assign chart style
plt.style.use('seaborn-v0_8')
plt.rcParams["figure.figsize"] = (12, 6)

# Create handy functions

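def clean_price_data(data):
    # Keep open and close prices; copy so the column edits don't touch the original frame
    df = data[['Open', 'Close']].copy()
    # Drop the "Ticker" level from the column MultiIndex and use lowercase names
    df.columns = df.columns.droplevel("Ticker")
    df.columns.name = None
    df.columns = ['open', 'close']
    return df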

def get_impact(dataf):
    # Create a new column for event dates (forward-fill)
    dataf["last_event_date"] = dataf.index.to_series().where(dataf["bullish"].notna()).ffill()

    # Get the next event date
    dataf["next_event_date"] = dataf["last_event_date"].where(dataf["bullish"].notna()).shift(-1)

    # Identify the day before the next event (must be in dataf.index)
    dataf["pre_next_event_date"] = dataf["next_event_date"].apply(
        lambda x: dataf.index[dataf.index < x].max() if pd.notna(x) else None
    )

    # Merge stock prices for the event and pre-next-event
    event_prices = dataf[["close"]].rename(columns={"close": "event_close"})
    dataf = dataf.merge(
        event_prices.rename(columns={"event_close": "last_event_close"}),
        left_on="last_event_date",
        right_index=True,
        how="left"
    )
    dataf = dataf.merge(
        event_prices.rename(columns={"event_close": "pre_next_event_close"}),
        left_on="pre_next_event_date",
        right_index=True,
        how="left"
    )

    # Calculate return from the event close to the close before the next event
    dataf["post_ret"] = np.log(dataf["pre_next_event_close"] / dataf["last_event_close"])
    dataf["post_ret"] = dataf["post_ret"].bfill()

    # Open-to-close return on survey days only
    dataf['impact'] = np.log(dataf.loc[~dataf['bullish'].isna(), 'close'] / dataf.loc[~dataf['bullish'].isna(), 'open'])

    # Daily close-to-close return
    dataf['ret'] = np.log(dataf['close'] / dataf['close'].shift(1))

    # Week-over-week change in each sentiment reading
    for sentiment in ['bullish', 'bearish', 'bull_bear_spread']:
        if sentiment != 'bull_bear_spread':
            dataf[f'{sentiment[:4]}_chg'] = dataf.loc[dataf[sentiment].notna(), sentiment].diff()
        else:
            dataf['spread_chg'] = dataf.loc[dataf[sentiment].notna(), sentiment].diff()

    return dataf

def quick_plot(dataf, x_col_1, y_col_1, y_col_2, xlab='Bullish', ylab1="Close-to-close", ylab2="Open-to-close",
               jitter=0.02, sample_size=1000, seed=42, save_fig=False, fig_name=None):
    np.random.seed(seed)  # For reproducibility

    # Randomly sample data if it has more than the desired sample size
    if len(dataf) > sample_size:
        dataf = dataf.sample(sample_size, random_state=seed)

    # Add jitter to x- and y-values and convert to percent
    x1_jittered = (dataf[x_col_1] + np.random.normal(0, jitter * (dataf[x_col_1].std()), size=len(dataf))) * 100
    y1_jittered = (dataf[y_col_1] + np.random.normal(0, jitter * (dataf[y_col_1].std()), size=len(dataf))) * 100
    y2_jittered = (dataf[y_col_2] + np.random.normal(0, jitter * (dataf[y_col_2].std()), size=len(dataf))) * 100

    fig, ax = plt.subplots(2, 1, sharex=True)

    # Scatter plots with jitter
    ax[0].scatter(x1_jittered, y1_jittered, alpha=0.6)
    ax[0].axhline(np.mean(dataf[y_col_1]), color='r', linestyle="--", label="Mean", linewidth=0.8)
    ax[0].set_ylabel(f"{ylab1} (%)")
    ax[0].legend()

    ax[1].scatter(x1_jittered, y2_jittered, alpha=0.6)
    ax[1].axhline(np.mean(dataf[y_col_2]), color='r', linestyle="--", label="Mean", linewidth=0.8)
    ax[1].set_ylabel(f"{ylab2} (%)")
    ax[1].set_xlabel(xlab)
    ax[1].legend()

    ax[0].set_title(f"Returns vs. {xlab} sentiment")
    plt.tight_layout()

    if save_fig:
        plt.savefig(f"botw/{fig_name}.png")

    plt.show()

def impact_plot(dataf, x_col, y_col, title_text, jitter=0.001, sample_size=3000, seed=42,
                save_fig=False, fig_name=None):
    np.random.seed(seed)  # For reproducibility

    clean_dat = dataf[[x_col, y_col]].dropna()

    # Randomly sample data if it has more than the desired sample size
    if len(clean_dat) > sample_size:
        dat = clean_dat.sample(sample_size, random_state=seed)
    else:
        dat = clean_dat.copy()

    # Add jitter to values and convert to percent
    x_jitter = (dat[x_col] + np.random.normal(0, jitter * (dat[x_col].std()), size=len(dat))) * 100
    y_jitter = (dat[y_col] + np.random.normal(0, jitter * (dat[y_col].std()), size=len(dat))) * 100

    # Perform OLS regression to get the p-value
    X = sm.add_constant(x_jitter)  # Add intercept term
    model = sm.OLS(y_jitter, X).fit()

    # params and pvalues are label-indexed Series, so select by position with .iloc
    slope, intercept = model.params.iloc[1], model.params.iloc[0]
    p_value = model.pvalues.iloc[1]  # p-value of the independent variable

    # Generate x-values for the regression line
    x_range = np.linspace(x_jitter.min(), x_jitter.max(), 100)
    y_pred = intercept + slope * x_range

    # Create plot
    plt.figure()
    plt.scatter(x_jitter, y_jitter, alpha=0.5, label="Data")

    # Regression line
    reg_line_plot, = plt.plot(x_range, y_pred, 'r--', linewidth=1, label="Regression Line")

    # 45-degree line
    min_val, max_val = min(x_jitter.min(), y_jitter.min()), max(x_jitter.max(), y_jitter.max())
    diag_line_plot, = plt.plot([min_val, max_val], [min_val, max_val], 'purple', linestyle='dashed', linewidth=1, label="45-degree Line")

    # Display regression equation
    equation_text = f"Equation: \ny = {slope:.2f}x + {intercept:.2f} \nP-value of X = {p_value:.4f}"
    plt.text(1.05 * min_val, 0.75 * max_val, equation_text, fontsize=10, color='red')

    # Labels and legend
    plt.xlabel("Day of Return (%)")
    plt.ylabel("Next Week Return (%)")
    plt.legend(handles=[reg_line_plot, diag_line_plot], loc='upper left', ncol=2)
    plt.title(title_text)

    if save_fig:
        plt.savefig(f"botw/{fig_name}.png")

    plt.show()

# Load data
# Download the sentiment data from: https://www.aaii.com/files/surveys/sentiment.xls
# The line below reads a CSV, so the download is assumed to have been saved as CSV
# with a 'date' column and lowercase column names
data = pd.read_csv("path/to/data/sentiment.csv", parse_dates=True, index_col='date').iloc[:,:12]
data = data.loc[~data.index.isna()]

# Plot historical sentiment data
(data[['bullish', 'bullish_avg']].rolling(8).mean()*100).plot()
plt.xlabel('')
plt.ylabel('Bullish')
plt.legend(['Bullish', 'Long-term Average'])
plt.title("AAII Survey Percent Bullish")
plt.show()

(data[['bull_bear_spread']].rolling(8).mean()*100).plot()
# Pass the average as a scalar so hlines draws a single reference line
plt.hlines(y=data['bull_bear_spread'].mean()*100, xmin=data.index.min(), xmax=data.index.max(), colors='green')
plt.xlabel('')
plt.ylabel('Spread')
plt.legend(['Spread', 'Spread Average'])
plt.title("AAII Survey Bull-Bear Spread")
plt.show()

# Load ETFs
start = data.index.min()
end = data.index.max() + pd.offsets.BDay(1)
spy = yf.download('SPY', start, end)

# Create first dataframe
df = clean_price_data(spy)
df = pd.merge(df, data[['bullish', 'bearish', 'neutral', 'bull_bear_spread']], how="left", left_index=True, right_index=True)
df = get_impact(df)

# Plot market impact close-to-close and open-to-close
quick_plot(df, "bullish", "ret", "impact", sample_size=3000)

# Plot market impact open-to-close and next week close
quick_plot(df, "bullish", "impact", "post_ret", sample_size=3000, ylab1="Open-to-close", ylab2="Next week",
           save_fig=True, fig_name="spy_bull_open2close_post_ret")

# Plot next week return vs. close-to-close return
impact_plot(df, 'ret', 'post_ret', "Following week vs. prior close Returns",
            save_fig=True, fig_name="spy_close2close_nextweek_impact")